Facebook: Trump can’t recruit ‘army’ of poll watchers under new voter intimidation rules

In a blog post Wednesday, Facebook said it will no longer allow content that encourages poll watching that uses “militarized” language or intends to “intimidate, exert control, or display power over election officials or voters.” Facebook credited the update to its platform rules to the civil rights experts it worked with to create the policy.

Facebook Vice President of Content Policy Monika Bickert elaborated on the new rules in a call with reporters, noting that the wording would prohibit posts that use words like “army” or “battle,” a choice that appears to take direct aim at the Trump campaign’s effort to recruit an “army for Trump” to watch the polls on election day. Last month, Donald Trump Jr. called for supporters to “enlist now” in an “army for Trump election security operation” in a video that was posted on Facebook and other social platforms.

“Under the new policy if that video were to be posted again, we would indeed remove it,” Bickert said.

The company says that while posts calling for “coordinated interference” or showing up armed at polling places are already targeted for removal, the expanded policy will more fully address voter intimidation concerns. Facebook will apply the expanded policy going forward, but it won’t affect content already on the platform, including the Trump Jr. post.

Poll watching to ensure fair elections is a regular part of the process, but weaponizing those observers to seek evidence for unfounded claims about “fraudulent ballots” and a “rigged” election is something new — and something more akin to voter intimidation. Poll watching laws vary by state and some states limit how many poll watchers can be present and how they must identify themselves.

Trump has repeatedly failed to say he will accept the election results in the event that he loses, a position that poses an unprecedented threat to the peaceful transition of power in the U.S. That concern is one of many that social media companies and voting rights advocates are anxiously keeping an eye on as election day nears.

“Donald Trump is not interested in election integrity, he’s interested in voter suppression,” VoteAmerica Founder Debra Cleaver said of the Trump campaign’s poll watching efforts. “Sending armed vigilantes to the polls is a solution to a problem that doesn’t exist, unless you believe that Black and Brown people voting is a problem.”

Facebook is also making some changes to its rules around political advertising. The company will no longer allow political ads immediately following the election in an effort to avoid chaos and false claims.

“… While ads are an important way to express voice, we plan to temporarily stop running all social issue, electoral, or political ads in the U.S. after the polls close on November 3, to reduce opportunities for confusion or abuse,” Facebook Vice President of Integrity Guy Rosen wrote in a blog post. Rosen added that Facebook will let advertisers know when those ads are allowed again.

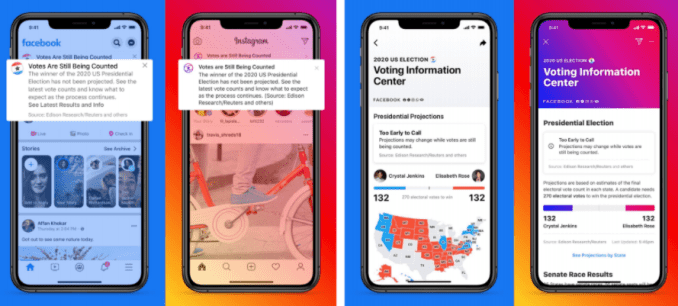

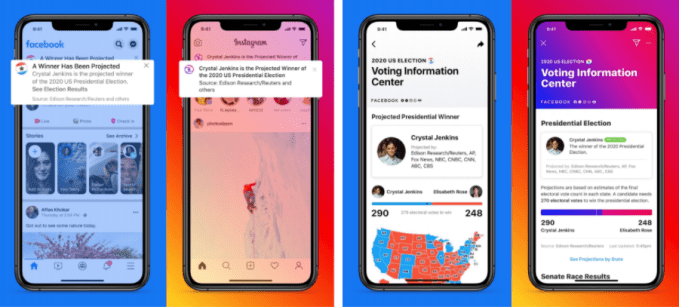

Facebook also provided a glimpse of what its apps will look like on what might shape up to be an unusual election night. The company will place a notification at the top of the Instagram and Facebook apps with the status of the election in an effort to broadly fact-check false claims.

Images via Facebook

Those messages will remind users that “Votes are still being counted” before switching over to a message that “A winner has been projected” after a reliable consensus emerges about the race. Because the results of the election may not be apparent on election night this year, it’s possible that users will see these messages beyond November 3. If a candidate declares a premature victory, Facebook will add one of these labels to that content.

Facebook also noted that it is now using a viral content review system, a measure designed to prevent the many instances in which misinformation or otherwise harmful content racks up thousands of views before eventually being removed. According to Facebook, the tool, which it has relied on “throughout election season,” provides a safety net that helps the company detect content that breaks its rules so it can take action to limit its spread.

In the final month before the election, Facebook is notably showing less hesitation toward policing misinformation and other harmful political content on its platform. The company announced Tuesday that it would no longer allow the pro-Trump conspiracy theory known as QAnon to flourish there, as it has over the last four years. Facebook also removed a post this week in which President Trump, fresh out of a multi-day hospital stay, claimed that COVID-19 is “far less lethal” than the flu.

✍ Credit given to the original owner of this post : ☕ Social – TechCrunch
