Facebook drafts a proposal describing how its new content review board will work

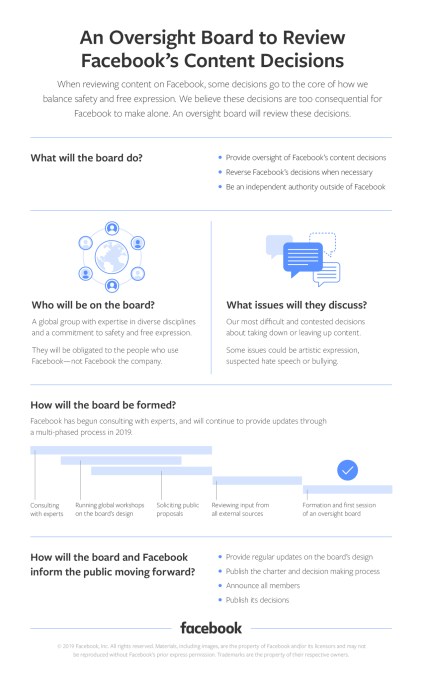

In November, Facebook announced a plan to revamp how it makes content policy decisions on its social network: it will begin handing some of the more contested decisions to an independent review board. The board will serve as the final level of escalation for appeals around reported content, acting something like a Facebook Supreme Court. Today, Facebook is sharing more detail, in a PDF document, about how this board will be structured and how the review process will work.

Facebook earlier explained that the review board wouldn’t be making the first — or even the second — decision on reported content. Instead, when someone reports content on Facebook, the first two appeals will still be handled by Facebook’s own internal review systems. But if someone isn’t happy with Facebook’s decision, the case can make its way to the new review board to consider.

However, the board may not take on every case that's pushed up the chain. Instead, the company has said, it will focus on the cases it considers most important.

Today, Facebook explains in more detail how the board will be staffed and how its decisions will be handled.

In a draft charter, the company says that the board will include experts with experience in “content, privacy, free expression, human rights, journalism, civil rights, safety, and other relevant disciplines.” The member list will also be public, and the board will be supported by a full-time staff that will ensure its decisions are properly implemented.

While no final decisions about the board's makeup have been made, Facebook is today suggesting that the board have 40 members. Facebook will choose them after publicly announcing the required qualifications for joining, and says it will give special consideration to factors like "geographical and cultural background" and a "diversity of backgrounds and perspectives."

The board will also not include former or current Facebook employees, contingent workers of Facebook or government officials.

Once launched, the board itself will be responsible for selecting future members as current members' terms end.

Facebook believes the ideal term length is three years, automatically renewable once for members who want to continue. Board members will also serve "part-time," a necessary consideration since many will likely have roles outside of policing Facebook content.

Facebook will ultimately give the board the final say: the board can reverse Facebook's own decisions when necessary. The company may then choose to incorporate some of the board's rulings into its own policy development. At times, Facebook may also seek policy advice from the board even when no decision is pressing.

Cases will reach the board both through the user appeals process and directly from Facebook. In the latter case, Facebook will likely hand off the more controversial or hotly debated decisions, or those where existing policy seems to conflict with Facebook's own values.

To further guide board members, Facebook will publish a final charter that includes a statement of its values.

The board will not decide cases where doing so would violate the law, however.

Cases will be heard by smaller panels made up of a rotating, odd number of board members. Decisions will be attributed to the review board as a whole, and the names of the members who decided an individual case will not be attached to the decision, a measure that would likely help protect them from targeted threats and harassment.

The board’s decisions will be made public, though it will not compromise user privacy in its explanations. After a decision is issued, the board will have two weeks to publish its decision and explanation. In the case of non-unanimous decisions, a dissenting member may opt to publish their perspective along with the final decision.

Much like a higher court, the board will consult its prior opinions before finalizing its decision on a new case.

After deciding their slate of cases, the members of the first panel will choose a slate of cases to be heard by the next panel. That panel will then pick the third slate of cases, and so on. A majority of members on a panel will have to agree that a case should be heard for it to be added to the docket.

Because 40 people can’t reasonably represent the entirety of the planet, nor Facebook’s 2+ billion users, the board will rely on consultants and experts, as required, in order to gather together the necessary “linguistic, cultural and sociopolitical expertise” to make its decisions, Facebook says.

To keep the board impartial, Facebook plans to spell out guidelines for recusal when a conflict of interest arises, and it will not allow the board to be lobbied or to accept incentives. Members will, however, be paid a standardized, fixed salary that is set in advance of their terms.

None of these plans are final; they are only Facebook's initial proposals.

Facebook is issuing them in draft format to gather feedback and says it will open up a way for outside stakeholders to submit their own proposals in the weeks ahead.

The company also plans to host a series of workshops around the world over the next six months, where it will get various experts together to talk about issues like free speech and technology, democracy, procedural fairness and human rights. The workshops will be held in Singapore, Delhi, Nairobi, Berlin, New York, Mexico City and other cities yet to be announced.

Facebook has been criticized for its handling of issues like the calls to violence that led to genocide in Myanmar and riots in Sri Lanka; election meddling from state-backed actors from Russia, Iran and elsewhere; its failure to remove child abuse posts in India; the weaponization of Facebook by the government in the Philippines to silence its critics; Facebook’s approach to handling Holocaust denials or conspiracy theorists like Alex Jones; and much more.

Some may say Facebook is now offloading its responsibility by referring the tough decisions to an outside board. This, after all, could potentially save the company itself from being held accountable for war crimes and the like. But on the other hand, Facebook has not shown itself capable of making reasonable policy decisions related to things like hate speech and propaganda. It may be time for it to bring in the experts, and let someone else make the decisions.

✍ Source : ☕ Social – TechCrunch
